Earlier in the summer I discussed a security-oriented programming contest we were planning to run called Build-it, Break-it, Fix-it (BIBIFI). The contest completed about a week ago, and the winners are now posted on the contest site, https://builditbreakit.org.

Here I present a preliminary report of how the contest went. In short: well!

We had nearly a dozen qualifying submissions out of the 28 teams that made an attempt, and these submissions used a variety of languages: the winners programmed in Python and Haskell, and other submissions were in C/C++, Go, and Java (with one non-qualifying submission in Ruby). Scoring was based on security, correctness, and performance (as in the real world!), and in the end the first two mattered most: teams found many bugs in qualifying submissions, and at least one team was scoring near the top until other teams found that its program paid little attention to security.

We have much data analysis still to do to understand more about what happened and why. If, after reading this report, you have scientific questions you think we should investigate, I’d love to hear them. In the end, I think the contest succeeded in emphasizing that security is not just about breaking things, but also about building them correctly.

Recap: The BIBIFI contest rules

I described the contest in detail, and its rationale, in my earlier post. To recap, briefly: The contest aims to evaluate how well students can write secure programs (that are nevertheless featureful and efficient), and how well they can find defects in security-minded programs.

The BIBIFI contest is broken up into three rounds.[ref]Originally, the goal was for the rounds to be 72 hours long, but we ended up course-correcting, as discussed in the As it unfolded section.[/ref] During the Build It round, builders write software that implements the system prescribed by the contest. To qualify for the next round they must pass a minimal set of performance and correctness tests; for more points, they can try for more efficient implementations and/or they can implement optional features. In the Break It round, breakers find as many flaws as possible in the qualifying Build-It implementations, submitting failing test cases as evidence of flaws. During the Fix It round, builders attempt to fix any problems in their Build It submissions that were identified by other breaker teams. Doing so helps us adjust the scoring by determining which of the submitted test cases are exposing the same underlying defect (since fixing a single bug could cause several test cases to pass).
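The Fix-it deduplication can be sketched in a few lines of Python. This is a minimal illustration, not the contest's actual infrastructure: it assumes each accepted fix addresses exactly one bug, represents fixes as a hypothetical mapping from a fix ID to the test cases that pass once it is applied, and counts any failing test that no fix explains as its own bug.

```python
def count_unique_bugs(failing_tests, fixes):
    """Collapse failing test cases into unique bugs.

    failing_tests: set of test-case IDs that failed against one submission.
    fixes: dict mapping a (hypothetical) fix ID to the set of test IDs that
           pass once that fix is applied; each fix is assumed to fix one bug.
    Returns (number_of_unique_bugs, dict mapping each bug to its tests).
    """
    bugs = {}
    explained = set()
    for fix_id, passing in fixes.items():
        # Only credit tests that actually failed and that no earlier fix explained.
        newly_explained = (passing & failing_tests) - explained
        if newly_explained:
            bugs[fix_id] = newly_explained
            explained |= newly_explained
    # Without a fix we cannot tell which tests share a bug, so each counts once.
    for test_id in sorted(failing_tests - explained):
        bugs["unfixed:" + str(test_id)] = {test_id}
    return len(bugs), bugs
```

For example, if tests t1 through t4 fail and a single fix makes t1, t2, and t3 pass, the submission is charged for two bugs rather than four. The first-fix-wins attribution is a simplifying choice: a test made to pass by two different fixes is credited only to the first.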

There are two sets of prizes: first and second prize for the best build-it teams, and first and second prize for the best breaker teams. Teams can participate in either or both categories, and the scoring was kept separate for each.

The contest problem

For this run of the contest we wanted a problem that was conceptually simple, would be feasible to implement in a couple of days, and had security as an explicit goal. We chose to have participants implement a secure log describing the state of an art gallery: the guests and employees who have entered and left, and which persons are in which rooms. The log is used by two programs. One program, logappend, appends new information to the log file, and the other, logread, reads the file and displays the state of the art gallery according to a given query over the log. Both programs take an authentication token as a command-line argument: to work, invocations of logappend and logread must supply the token the log was created with. The implementation must ensure confidentiality: without the token, an adversary should learn nothing about the contents of the file, even with direct access to it. Likewise, it must ensure integrity: without the token, an adversary should not be able to modify the file in a way that logread or logappend (when run with the correct token) would accept as a legitimate log.
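To make the two requirements concrete, here is a minimal Python sketch of one way a log file could be protected, assuming the whole log is re-encrypted and re-authenticated on every logappend. It derives two keys from the token with PBKDF2, encrypts with a keystream built from HMAC-SHA256 in counter mode, and authenticates the salt, nonce, and ciphertext with a separate HMAC (encrypt-then-MAC). The keystream construction is used only so the example needs nothing beyond the standard library; a real submission would more sensibly use a vetted authenticated cipher such as AES-GCM from a cryptography library. The names seal_log and open_log and the file layout are hypothetical, not part of the contest specification.

```python
import hashlib
import hmac
import os

SALT_LEN = 16
NONCE_LEN = 16
TAG_LEN = 32  # HMAC-SHA256 output size in bytes


def _derive_keys(token, salt):
    # Stretch the token into 64 bytes: first half encrypts, second half MACs.
    material = hashlib.pbkdf2_hmac("sha256", token.encode(), salt, 100_000, dklen=64)
    return material[:32], material[32:]


def _keystream(enc_key, nonce, length):
    # HMAC-SHA256 as a PRF in counter mode: block i = HMAC(enc_key, nonce || i).
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hmac.new(enc_key, nonce + counter.to_bytes(8, "big"), hashlib.sha256).digest()
        counter += 1
    return bytes(out[:length])


def seal_log(token, plaintext):
    """Encrypt and authenticate the full log contents (hypothetical file layout)."""
    salt, nonce = os.urandom(SALT_LEN), os.urandom(NONCE_LEN)
    enc_key, mac_key = _derive_keys(token, salt)
    stream = _keystream(enc_key, nonce, len(plaintext))
    ciphertext = bytes(p ^ k for p, k in zip(plaintext, stream))
    body = salt + nonce + ciphertext
    tag = hmac.new(mac_key, body, hashlib.sha256).digest()
    return body + tag


def open_log(token, blob):
    """Verify the MAC first; decrypt only if the file is untampered."""
    if len(blob) < SALT_LEN + NONCE_LEN + TAG_LEN:
        raise ValueError("integrity violation")
    body, tag = blob[:-TAG_LEN], blob[-TAG_LEN:]
    salt = body[:SALT_LEN]
    nonce = body[SALT_LEN:SALT_LEN + NONCE_LEN]
    ciphertext = body[SALT_LEN + NONCE_LEN:]
    enc_key, mac_key = _derive_keys(token, salt)
    expected = hmac.new(mac_key, body, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("integrity violation")
    stream = _keystream(enc_key, nonce, len(ciphertext))
    return bytes(c ^ k for c, k in zip(ciphertext, stream))
```

A wrong token yields different keys, so the MAC check fails and open_log refuses the file, as it does for a flipped byte or a truncated file. This sketch does not, however, stop an adversary from swapping in an older copy of the log sealed under the same token; whether that counts as an integrity violation depends on how the specification defines integrity.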

During the build-it round, the participants’ submissions were graded on performance, according to their rank order among qualifying implementations. Performance criteria included latency and log file size following a series of operations (in both cases, smaller is better). Participants could also implement extra features, which amounted to added queries in logread, for extra points.

During the break-it round, teams could submit security-related test cases that demonstrated a violation of confidentiality (by proving they knew something about a pre-populated log that they shouldn’t), a violation of integrity (by corrupting a log without the logread and/or logappend programs detecting it), or an exploit (by causing either program to core dump). Ordinary correctness bugs were also worth points (since they could ultimately be security related too; we just don’t know), but only half as much as security bugs.
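A break-it harness has to classify each test case's outcome and weight it accordingly. The Python sketch below is illustrative only: the Finding categories mirror the ones described above, `security_points` is a hypothetical parameter (the actual point values are not given here), and crash detection relies on the fact that, on POSIX systems, Python's subprocess module reports a child killed by signal N with return code -N.

```python
import signal
from enum import Enum


class Finding(Enum):
    CONFIDENTIALITY = "confidentiality"  # learned something about a log without the token
    INTEGRITY = "integrity"              # tampered log accepted by logread/logappend
    CRASH = "crash"                      # program killed by a core-dumping signal
    CORRECTNESS = "correctness"          # wrong behavior, no security impact shown


SECURITY_FINDINGS = {Finding.CONFIDENTIALITY, Finding.INTEGRITY, Finding.CRASH}

# A subset of signals whose default action is to terminate and dump core.
CORE_SIGNALS = {int(s) for s in (signal.SIGSEGV, signal.SIGABRT, signal.SIGFPE, signal.SIGILL)}


def is_crash(returncode):
    """True if a subprocess return code means death by a core-dumping signal."""
    return returncode < 0 and -returncode in CORE_SIGNALS


def finding_points(kind, security_points):
    """Points for one unique bug; correctness bugs earn half as much as security bugs."""
    if kind in SECURITY_FINDINGS:
        return security_points
    return security_points // 2
```

In a harness, passing `subprocess.run(...).returncode` to is_crash would flag an exploit-style test, while an ordinary nonzero exit status is treated as a plain failure rather than a crash.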

The results, from 30,000 feet

The BIBIFI contest started on August 28. Here are some factoids about it, some of which are discerned from the final scoreboard:

- 90 teams were formed for the contest (comprising 166 people), and several dozen other people registered but never managed to form a team. Students who registered for the competition came from 61 universities across the USA.

- 28 teams (totaling 64 people) worked on a Build-It submission for the first round of the contest. Of these, 11 teams qualified (totaling 28 people) for consideration in the second round, by virtue of passing all of the gateway correctness and performance tests. Qualifying teams’ programs were written in Java, C/C++, Go, Python, and Haskell. The two winning teams used Python (first) and Haskell (second). Python was the most popular language, chosen by 6 teams.

- 9 teams (25 people) competed in the Break-it round. Of these, 7 teams scored points by finding bugs in Build-it submissions. Student teams largely employed testing and manual inspection to find bugs; the second-place team, however, also used static analysis and fuzz testing. In total, Break-it teams found 178 unique bugs in qualifying submissions.

- We also had two professional teams (from Cyberpoint and AT&T) participate in the Break-it round. The bugs they found counted against the Build-it scores, but did not affect student Break-it teams’ scores. Professional teams also used fuzz testing. The winning student teams did better than both professional teams.

- 3 Build-it teams managed to earn points back by fixing bugs during the Fix-it round. Because a single fix could show that several test cases exposed the same underlying bug, doing so dropped the total number of unique bugs from 200 to 178.

As it unfolded

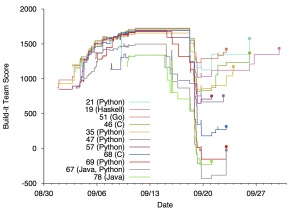

This is a timeline showing the contest as it unfolded, from the point of view of the build-it teams.

The x-axis of the chart is the date, with the start of the contest on the left, and the y-axis is the score. Each line is one build-it team, so the chart shows each team's score fluctuations over time. Teams appear when they first qualify by passing all of the core tests, which nets them 1000 points, plus any performance bonuses and points for optional features.

Build-it Round

The build-it round went from 8/28 to 9/9. We had originally planned for each round to last about 72 hours, but as the first round was about to end we found that only three teams had managed to finish the project. So we extended the first round by a little over a week, and extended the other rounds by a few days.

We can see the steady buildup from the left. This tracks teams as they continue to gain points for implementing optional features. In the end, nearly every qualifying team implemented every optional feature; only teams 47, 67, and 78 did not, and you can see that at 9/9, when the build-it round ends, their scores are well below the cluster at the top. The differences among the top lines are due to performance.

One interesting data point is not on the chart: one team was qualifying, with a very good score, just 5 minutes before the end of the round, but then submitted something that caused all of the qualifying tests to fail. This was unfortunate, though making major changes with five minutes to go was also unwise. Still, in the future we may start accepting the most recent qualifying submission.

Break-it Round

The Break-it round went from 9/11 to 9/16. You can see scores starting to drop during this round, but they continue to drop afterwards. This is because correctness-oriented test cases submitted by break-it teams needed to be vetted by judges (to confirm they were legitimate tests) and this work was done after the round ended.

It is striking how precipitously several submissions’ scores fell. The bottom four teams suffered from both security and correctness bugs: none of them implemented security defenses at all (e.g., the log file was just a serialized data structure). In all, six teams were found to have security vulnerabilities, and every team had at least some correctness bugs. Note that team 46 implemented their submission in C but had no security bugs. On the other hand, team 68 also used C and was very close to the top prior to the break-it round, but its score dropped sharply when it was found to implement no security at all.

Fix-it Round

This round went from 9/19 to 9/23. Several teams fixed bugs, improving their scores; you can see this happening shortly after the round, once the judges had confirmed that each fix addressed only one bug. During and after the fix-it round there was also a period during which teams could dispute test cases that judges had deemed legitimate; in many cases these rulings were overturned, so we see many scores rise. Likewise, we resolved disputes about judgments on fixes. In the end, these resolutions had a major impact on the scores: on the last day, you can see that a couple of dispute resolutions determined the second-place winner.

Lessons

I am happy with how the BIBIFI contest turned out, but there are several things we are going to do differently next time.

The rounds need to be longer, and scheduled at a better time of year. Several teams, in our post-contest survey, told us that they did not end up participating because of scheduling and time pressure. Extending the first round was crucial for attracting many more participating teams.

We need to increase automation. Having judges decide by hand whether test cases and fixes were legitimate worked with a dozen or so teams, but will not scale beyond that. Judges also sometimes ruled inconsistently, resulting in many decisions being overturned. Automation can address both issues.

Too many points were lost to bugs arising from misinterpreted corner cases of the specification. We can and should tighten the spec in the future, but this can only go so far. A solution to this problem, and to the problem of inefficient and inconsistent judging, is to have an oracle implementation (hosted on a server) that teams can test against to confirm their intuitions about the spec and to check whether test cases are legitimate. Of course, we have to make sure this implementation is not exploitable, or we’re in trouble!

What’s next?

Now we have to do a deeper analysis of the data and see what we can learn from it. For example, how many bugs went unfound, and did these affect the final outcomes? What development processes did people use when working as teams (we have their Git histories)? What were the most effective techniques for finding bugs? There are surely many more questions to ask.

If you have questions you think we might try to answer, let us know!

Thanks!

I’d like to close by acknowledging my collaborators. I co-conceived BIBIFI with my Ph.D. student, Andrew Ruef, who was also a major designer and implementer of the infrastructure. M.S. student James Parker built much of the contest web site (written in Yesod for Haskell!) and made major contributions to all other aspects of the contest. Dave Levin, Atif Memon, and Jan Plane are co-PIs with me on NSF award 1319147, which funded the development of the contest. My co-PIs have all provided important contributions to many aspects of the contest, from scoring, to survey design, to problem descriptions, and more.

My collaborators and I would like to thank all who helped run this inaugural competition, from judges, to student problem vetters, to corporate sponsors. We couldn’t have done BIBIFI without you!

I love that idea! This is a great way to push forward security awareness, especially among young people.

I just had a look at the builditbreakit.org site, and here are some thoughts I’d like to share:

The site seems to be somewhat unmaintained. For example, the “About” section talks about the event as if it will happen in the future, including the appeal “Sign up now!”

Maybe there should be some post-competition information, such as the great analysis you did in this blog entry. Why isn’t this featured prominently on that page, covering the last competition and perhaps announcing the next one?

Also, during the competition, was there any way for outsiders to share the thrill? For example, were outsiders able to look at the current solutions after each round, or before the next one?

Even if you don’t want to publish anything during the competition for some reason, I don’t understand why, even in hindsight, no code, no list of found bugs, and no fixes have been published. Why is this?

It would probably help to ask all participants to put their code in the public domain or under a free software license (e.g., the ISC license).

I believe that future contestants could learn a lot from past submissions. What techniques did they use? Which bugs were found? How hard was it to fix them?

Also, this type of openness would help to reduce some of the “security by obscurity” mentality that we see all too often.

Did you find any evidence for or against djb’s famous statement that striving for bug-free code does more for security than anything else?

Did you re-evaluate the contest rule that “normal” bugs count half as much as “security” bugs? Was this rule helpful or harmful?

(Helpful: Did the contestants pay more attention to security? Harmful: Were some “normal” bugs not taken seriously, which then turned out to be security relevant?)

Finally, how did you separate the “normal” from the “security” bugs? When in doubt, did you categorize bugs as “normal” or as “security”?

(For example, was every bug “normal” until somebody took the time to write an exploit? Or was every bug considered a “security bug” unless it was undoubtedly clear that it is absolutely harmless?)